Great application performance is always desirable as it impacts how your users perceive your application. This covers both the CMS and your frontend user experience.

Good application performance has many benefits; the most notable are listed below:

- Better user experience: end users greatly appreciate faster loading times

- Lower strain on your infrastructure: this can translate to lower monthly infrastructure costs

- Smoother development: faster running times can lead to faster iterations in day-to-day development


Performance and layers

We recommend applying various layers of caching, from HTTP caching headers to caching whole responses to caching individual pieces of content, depending on the situation. This helps to separate performance concerns and decouple various performance aspects.

Note that before you dive in too deeply, it’s important to understand your application and where its bottlenecks are likely to be. Profiling an application is a good way to do this, and there are plenty of resources about how to do it across the internet. This will help you avoid premature optimisation, which is when you apply caching and other techniques where they’re not really needed. Premature optimisation wastes time and can also make an application more complex, making it harder to maintain. In the worst case, it can degrade performance instead of improving it.

That said, there are some common areas that are generally worth caching up front. Knowing what those are is a matter of experience that you will build up over time.

Caching

One way to improve performance is to configure caching well in your application. Silverstripe framework offers multiple caching solutions out of the box at the application level, and other caching solutions, such as a CDN, can be added on top of your Silverstripe CMS application. It’s also recommended to set up HTTP cache headers to improve caching.

The idea of caching is quite simple: frequently used data is stored somewhere that is fast to access, so we can get to it quickly when we need it. This is a very simplified and generic description; in reality, caching solutions are usually much more complex.

Per-request in-memory cache

One of the simplest caching solutions is the per-request in-memory cache. Here, cached data is stored only in memory, so it persists only for the duration of the request currently being processed. This is still a fairly generic concept, so let’s jump into a specific example.

Model in-memory cache

Silverstripe framework comes with a model-level in-memory cache out of the box. It is intended for frequent model lookups. To use it efficiently, you need a good understanding of your application code, as you have to decide where this cache should be used.

Let’s look at some code examples. Both of these methods will fetch your model from the database.

```php
// Cached method
DataObject::get_by_id(MyModel::class, $id);

// Uncached method
MyModel::get()->byID($id);
```

The first one uses the in-memory cache, so it only fetches data from the database when the model isn’t cached already. It’s up to you to decide which method is more suitable in a given situation. Our general recommendation is to use the cached method if you expect to hit the cache at least once on average. Failed lookups are also cached.
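To see why caching failed lookups matters, here is a framework-free sketch of a per-request lookup cache. It is only an illustration, not the actual get_by_id() implementation; `ModelLookupCache`, the `$fetch` closure and the fake data source are hypothetical. The key detail is `array_key_exists()`: unlike `isset()`, it treats a stored `null` as a hit, so a record that doesn’t exist is only looked up once.

```php
<?php
declare(strict_types=1);

// Hypothetical per-request lookup cache (illustration only, not the framework's code)
final class ModelLookupCache
{
    /** @var array<string, array|null> */
    private array $cache = [];

    // $fetch performs the real (expensive) lookup, e.g. a database query
    public function __construct(private \Closure $fetch)
    {
    }

    public function getById(string $class, int $id): ?array
    {
        $key = $class . '#' . $id;
        // array_key_exists() also matches stored nulls, so failed lookups are cached too
        if (!array_key_exists($key, $this->cache)) {
            $this->cache[$key] = ($this->fetch)($class, $id);
        }
        return $this->cache[$key];
    }
}

// Hypothetical data source that counts how many "queries" are run
$queries = 0;
$rows = ['Page' => [1 => ['ID' => 1, 'Title' => 'Home']]];
$cache = new ModelLookupCache(function (string $class, int $id) use (&$queries, $rows): ?array {
    $queries++;
    return $rows[$class][$id] ?? null;
});

$cache->getById('Page', 1);
$cache->getById('Page', 1);  // served from memory
$cache->getById('Page', 99); // missing record: null is cached
$cache->getById('Page', 99); // served from memory

echo $queries, PHP_EOL; // 2
```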

Let’s examine how cache keys are generated in the Silverstripe framework in more detail. Cache key generation is already pre-configured so you don’t have to do any configuration before using this cache. Cache keys need to be unique, so the default is to use model ID and model class as cache key components.

The DataObject::getUniqueKey() method allows the cache key to be adjusted via an extension point, so that new components can be added to it. This is relevant for modules like silverstripe/versioned or tractorcow/silverstripe-fluent, which introduce model variations such as:

- stage variation - a model can be in draft and live stages; both have the same model ID but potentially different data

- locale variation - model can be in English locale and German locale, again potentially with different data

In both these examples the model ID is not unique enough, so these modules update the cache key to include the relevant information.
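The idea can be sketched in plain PHP: the base key is class plus ID, and each variation (stage, locale) is appended as an extra component. The function name and component format below are hypothetical; the real getUniqueKey() and its extension hook are defined by the framework and the modules themselves.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical cache key builder: class + ID, plus variation components
 * (e.g. stage or locale) that modules would contribute via an extension point.
 *
 * @param array<string, string> $variations
 */
function buildUniqueKey(string $class, int $id, array $variations = []): string
{
    // Sort so the same variations always produce the same key, regardless of order
    ksort($variations);
    $parts = [$class, (string) $id];
    foreach ($variations as $name => $value) {
        $parts[] = $name . '=' . $value;
    }
    // Hashing keeps keys short and free of characters some cache backends reject
    return md5(implode('|', $parts));
}

$draftEn = buildUniqueKey('Page', 5, ['stage' => 'Stage', 'locale' => 'en_NZ']);
$liveEn  = buildUniqueKey('Page', 5, ['stage' => 'Live', 'locale' => 'en_NZ']);
$liveDe  = buildUniqueKey('Page', 5, ['locale' => 'de_DE', 'stage' => 'Live']);

var_dump($draftEn !== $liveEn); // bool(true)
var_dump($liveEn !== $liveDe);  // bool(true)
```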

In-memory cache service

There are situations where the model in-memory cache isn’t the right tool for the job. Sometimes you have a service which would greatly benefit from an in-memory cache, but the data doesn’t fit the model-level cache.

In the simplest cases you can just store the data in a static property on your class, but if you find yourself doing that in a lot of places, a dedicated service to hold that data may be useful.

Silverstripe framework provides a basic toolset for creating a service with an in-memory cache. As a first step, we need a way to indicate that our PHP class has an in-memory cache. We can do this by implementing the Resettable interface. Here is an example setup of a basic cache service:

```php
use SilverStripe\Core\Injector\Injectable;
use SilverStripe\Core\Resettable;

class PerRequestCacheService implements Resettable
{
    use Injectable;

    private array $cache = [];

    public function clearCache(): void
    {
        $this->cache = [];
    }

    // Called by the framework (for example before each test case) to drop cached data
    public static function reset(): void
    {
        self::singleton()->clearCache();
    }
}
```

The Resettable interface provides an explicit API that tells developers an in-memory cache is in use and how to reset it. This is very useful when debugging, as in-memory caching does increase the complexity of your code.

It also provides an important benefit for unit tests. Silverstripe framework will automatically call the reset() method of all services which implement the Resettable interface before each test case. This prevents data cached in-memory within one test from impacting another test.

The next crucial part which we need to cover is the cache key. It’s your responsibility to create a cache key which will be performant but also safe. Silverstripe framework provides you with a model cache key via the DataObject::getUniqueKey() method.

This is a safe and performant cache key for models. You can use it directly as your cache key or as one part of it. Here is how it can be used:

```php
// Method on the PerRequestCacheService class
public function getPageRelatedData(Page $page): ?DataObject
{
    $cacheKey = $page->getUniqueKey();

    // Cold cache: look the data up once and keep it for the rest of the request
    if (!array_key_exists($cacheKey, $this->cache)) {
        // loadData() executes the actual data lookup
        $this->cache[$cacheKey] = $this->loadData($page);
    }

    return $this->cache[$cacheKey];
}
```

You can learn about generating unique keys in the unique keys documentation.
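To try the behaviour outside the framework, here is a self-contained sketch of the same service. The `Resettable` interface and `singleton()` method below are minimal stand-ins for the framework’s versions, `loadData()` is a hypothetical lookup, and a plain object with an `ID` replaces `Page`. The `reset()` call near the end mimics what the framework does between test cases.

```php
<?php
declare(strict_types=1);

// Minimal stand-in for the framework's Resettable interface
interface Resettable
{
    public static function reset(): void;
}

final class PerRequestCacheService implements Resettable
{
    private static ?self $instance = null;

    /** @var array<string, mixed> */
    private array $cache = [];

    public int $loads = 0; // counts real lookups, for demonstration only

    // Stand-in for the singleton() method provided by the Injectable trait
    public static function singleton(): self
    {
        return self::$instance ??= new self();
    }

    public function clearCache(): void
    {
        $this->cache = [];
    }

    public static function reset(): void
    {
        self::singleton()->clearCache();
    }

    public function getPageRelatedData(object $page): ?string
    {
        $cacheKey = 'Page#' . $page->ID; // stand-in for $page->getUniqueKey()
        if (!array_key_exists($cacheKey, $this->cache)) {
            $this->cache[$cacheKey] = $this->loadData($page);
        }
        return $this->cache[$cacheKey];
    }

    // Hypothetical expensive lookup
    private function loadData(object $page): ?string
    {
        $this->loads++;
        return 'Related data for page ' . $page->ID;
    }
}

$service = PerRequestCacheService::singleton();
$page = (object) ['ID' => 7];
$service->getPageRelatedData($page);
$service->getPageRelatedData($page); // cache hit, no second lookup

PerRequestCacheService::reset();     // what the framework does before each test case
$service->getPageRelatedData($page); // cold cache again

echo $service->loads, PHP_EOL; // 2
```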

Template caching

Silverstripe templates already have a built-in cache for template variables on your ViewableData records. The example below shows two instances of the same template variable, which result in only a single method call during render.

```ss
<% if $FancyTitle %>
    $FancyTitle
<% end_if %>
```

You don’t need to set up any caching configuration; it just works out of the box. It’s important to be aware of this, though, so you don’t introduce redundant caching.

Partial template caching

Silverstripe framework provides a simple key-value storage capability which can be configured to work with various PSR-16 compliant storage options, such as the filesystem on a local disk or Redis.

This has implications for data persistence, as the key-value store may not provide permanent storage. It’s recommended to always test a cold boot scenario, where your application has no data stored in the partial cache, to make sure it can handle the initial period of cache population.

Partial cache also has an expiry mechanism that keeps the cached data reasonably up to date.
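To make the cold-start and expiry behaviour concrete, here is a toy key-value cache with per-item lifetimes in plain PHP. It is not the PSR-16 interface or Silverstripe’s cache backend; the class, the injected clock and the `$compute` closure are hypothetical, and the clock is injectable only so the example can simulate time passing.

```php
<?php
declare(strict_types=1);

// Hypothetical in-memory key-value cache with per-item expiry (in seconds)
final class ExpiringCache
{
    /** @var array<string, array{value: mixed, expires: int}> */
    private array $items = [];

    // $clock returns the current Unix time
    public function __construct(private \Closure $clock)
    {
    }

    public function get(string $key, mixed $default = null): mixed
    {
        $item = $this->items[$key] ?? null;
        if ($item === null || $item['expires'] <= ($this->clock)()) {
            return $default; // missing or expired
        }
        return $item['value'];
    }

    public function set(string $key, mixed $value, int $ttl): void
    {
        $this->items[$key] = ['value' => $value, 'expires' => ($this->clock)() + $ttl];
    }
}

$now = 1000;
$cache = new ExpiringCache(function () use (&$now): int {
    return $now;
});

$renders = 0;
$compute = function () use (&$renders): string {
    $renders++; // hypothetical expensive render
    return '<p>rendered</p>';
};

// Read-through: a cold or expired entry falls back to $compute and is stored again
$fetch = function () use ($cache, $compute): string {
    $html = $cache->get('footer');
    if ($html === null) {
        $html = $compute();
        $cache->set('footer', $html, 600);
    }
    return $html;
};

$fetch();    // cold cache: renders
$fetch();    // cache hit
$now += 601; // simulate just over ten minutes passing
$fetch();    // expired: renders again

echo $renders, PHP_EOL; // 2
```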

Partial template caching is implemented using cache tags to wrap template blocks you want to cache. This code block showcases how the cache tag is used:

```ss
<% cached 'cache-key', $templateVariable, $methodCall %>
    <p>This content is partially cached</p>
<% end_cached %>
```

You can pass any number of cache key segments to the cached block to build its cache key. These can be hard-coded values (such as “cache-key” above), template variables, or method calls.

More details about configuring and using this type of cache can be found in the partial template caching documentation.

It’s important to configure the cache lifetime to match your project’s needs rather than relying on the default. Cache expiry can easily be changed via configuration; see the “cache storage” section of the partial template caching documentation.

Caching in PHP

The PSR-16 caching solution is not limited to templates; you can also create a cache instance and use it directly in your PHP code. You can use Injector to attach a cache to your service and then access it through a property:

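```php
use Psr\SimpleCache\CacheInterface;
use SilverStripe\Core\Injector\Injectable;

class MyService
{
    use Injectable;

    // Injector sets the MyCache property when the service is created via
    // MyService::create() or Injector (not with `new MyService()`)
    private static array $dependencies = [
        'MyCache' => '%$' . CacheInterface::class . '.MyCacheIdentifier',
    ];

    public ?CacheInterface $MyCache = null;

    public function getData(string $cacheKey): mixed
    {
        // Cache read
        if ($this->MyCache->has($cacheKey)) {
            return $this->MyCache->get($cacheKey);
        }

        $data = $this->loadData(); // hypothetical expensive lookup

        // Cache write, with a lifetime of 600 seconds
        $this->MyCache->set($cacheKey, $data, 600);

        return $data;
    }
}
```

The cache identifier is important because it determines which cache configuration is used. If you want different services to share the same cache, make sure they use the same cache identifier.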
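One detail worth knowing: the PSR-16 specification recommends against calling has() followed by get() in live application code, because the item can expire or be removed between the two calls. A common alternative is a single get() with a unique default value (a sentinel), which also tells a cached `null` apart from a miss. The sketch below uses a tiny array-backed stand-in so it runs on its own; `ArrayCache` and `remember()` are hypothetical names, and with a real cache you would call the same PSR-16 get() and set() methods.

```php
<?php
declare(strict_types=1);

// Hypothetical array-backed stand-in exposing PSR-16-style get()/set()
final class ArrayCache
{
    /** @var array<string, mixed> */
    private array $items = [];

    public function get(string $key, mixed $default = null): mixed
    {
        return array_key_exists($key, $this->items) ? $this->items[$key] : $default;
    }

    public function set(string $key, mixed $value, ?int $ttl = null): bool
    {
        $this->items[$key] = $value; // TTL ignored in this stand-in
        return true;
    }
}

// Read-through helper: one get() call, with a sentinel object to detect a miss
function remember(ArrayCache $cache, string $key, int $ttl, callable $compute): mixed
{
    $miss = new stdClass(); // unique object, can never be a real cached value
    $value = $cache->get($key, $miss);
    if ($value === $miss) {
        $value = $compute();
        $cache->set($key, $value, $ttl);
    }
    return $value;
}

$cache = new ArrayCache();
$calls = 0;
$lookup = function () use (&$calls): ?string {
    $calls++;
    return null; // e.g. "no related data", which is still worth caching
};

remember($cache, 'related-data', 600, $lookup);
remember($cache, 'related-data', 600, $lookup); // cached null counts as a hit

echo $calls, PHP_EOL; // 1
```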

Further information about this type of caching including more configuration options can be found in the caching documentation.

Cache keys

There are some cases where generating cache keys is too hard or too demanding; for example, generating a safe cache key sometimes takes a similar amount of resources as rendering the content itself. This typically happens when you have complex content dependencies.

Composite cache key example

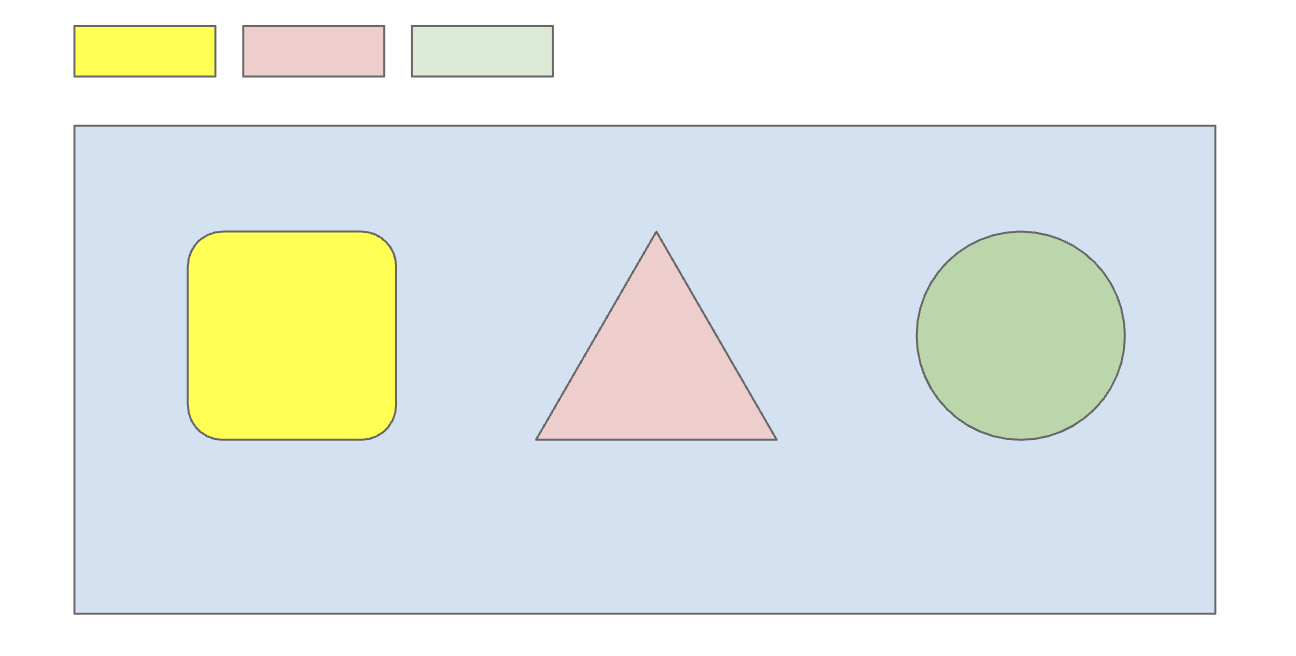

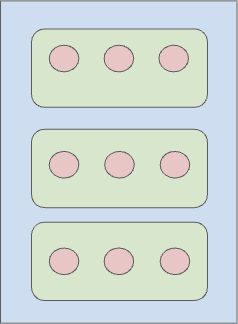

The diagram above represents a “cached” code block on a template that contains content taken from three different data sources, which are represented by the different shapes. The blue rectangle is the main model being rendered, and each of the smaller shapes is a child relation. At the top, the three rectangles represent the cache key for this model.

Each content source needs to be represented in the cache key, so that the key changes (invalidating the cached block) when any of the sources is updated with new content. For this example, that would require at least three database queries, and it could easily be more. These composite cache keys can get really complicated for features like dashboards, where a lot of data is pulled from outside a content block or page.
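To illustrate why composite keys get expensive, here is a plain PHP sketch that builds a key for the blue model from its own identifier plus three related sources, using each source’s item count and latest edit time. The data is hard-coded; in a real application each source would typically need its own database query, which is the cost described above. The function name and data shape are hypothetical.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical composite cache key: one component per content source.
 * Each source contributes its item count and most recent edit time, so adding,
 * removing or editing an item changes the key.
 *
 * @param array<string, list<array{ID: int, LastEdited: string}>> $sources
 */
function compositeKey(string $modelKey, array $sources): string
{
    $parts = [$modelKey];
    foreach ($sources as $name => $items) {
        // 'Y-m-d H:i:s' strings sort chronologically, so max() finds the latest edit
        $latest = $items ? max(array_column($items, 'LastEdited')) : '';
        $parts[] = sprintf('%s:%d:%s', $name, count($items), $latest);
    }
    return md5(implode('|', $parts));
}

$sources = [
    'yellow' => [['ID' => 1, 'LastEdited' => '2024-01-01 10:00:00']],
    'red'    => [['ID' => 2, 'LastEdited' => '2024-01-02 09:00:00']],
    'green'  => [],
];

$before = compositeKey('Block#10', $sources);
$sources['red'][0]['LastEdited'] = '2024-02-01 12:00:00'; // a related item is edited
$after = compositeKey('Block#10', $sources);

var_dump($before !== $after); // bool(true)
```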

silverstripe-terraformers/keys-for-cache is a Silverstripe CMS module which solves the cache key generation problem in a different way. Instead of generating complex cache keys on demand it uses an indexed cache key approach.

This means that cache keys are generated whenever content gets updated instead of dynamically at render time. Indexed cache keys are stored in separate data structures so they are easily accessible and also much more performant.

For this approach to work, the content dependencies need to be configured so that the correct cache keys are generated. This is done in a similar way to how ORM relations are set up (has_one, has_many).

The module provides static configuration called “cares” and “touches”, which describes content dependencies. Models with dependent content are linked so that cache keys are invalidated at the correct time. The module has detailed documentation on how to set it up; I recommend checking it out if you are considering using it.
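The sketch below does not reproduce the module’s configuration or internals, which are covered in its own documentation. It is a plain PHP illustration of the indexed idea only: each model has a stored key, dependencies are declared up front (loosely mirroring “cares”), and saving a model regenerates the stored keys of everything that depends on it, so reading a key at render time is a single lookup. `KeyIndex`, `dependsOn()` and `save()` are hypothetical names.

```php
<?php
declare(strict_types=1);

// Hypothetical indexed cache key store: keys are updated on write, not computed on read
final class KeyIndex
{
    /** @var array<string, string> stored cache key per model */
    private array $keys = [];

    /** @var array<string, list<string>> source model => models whose keys depend on it */
    private array $dependents = [];

    private int $version = 0;

    // "$model cares about $source": changes to $source invalidate $model's key
    public function dependsOn(string $model, string $source): void
    {
        $this->dependents[$source][] = $model;
    }

    // Called when content is saved; regenerates keys for the model and its dependents
    public function save(string $model): void
    {
        $seen = [];
        $this->touch($model, $seen);
    }

    // Render-time read: a single lookup, no queries against the related content
    public function keyFor(string $model): string
    {
        return $this->keys[$model] ??= $model . '@0';
    }

    /** @param array<string, true> $seen models already updated during this save */
    private function touch(string $model, array &$seen): void
    {
        if (isset($seen[$model])) {
            return; // already updated; also prevents infinite loops on cycles
        }
        $seen[$model] = true;
        $this->keys[$model] = $model . '@' . (++$this->version);
        foreach ($this->dependents[$model] ?? [] as $dependent) {
            $this->touch($dependent, $seen);
        }
    }
}

$index = new KeyIndex();
foreach (['Yellow', 'Red', 'Green'] as $child) {
    $index->dependsOn('Blue', $child);
}

$before = $index->keyFor('Blue');
$index->save('Red');             // editing a child...
$after = $index->keyFor('Blue'); // ...changes the parent's stored key

var_dump($before !== $after); // bool(true)
```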

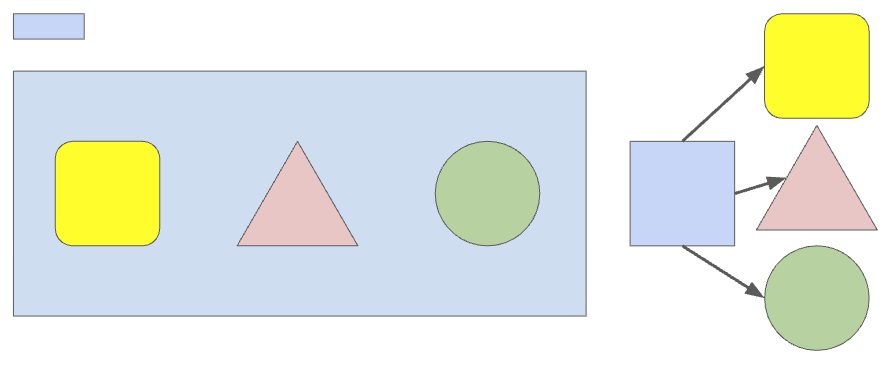

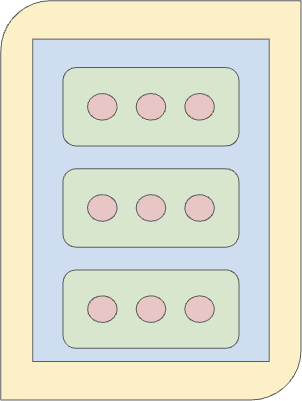

Let’s try to visualise how the setup works with the indexed cache key. In this example, unlike the previous one, the blue model relies on its own indexed cache key. For this key to work, we need to configure the blue model to depend on the yellow, red and green models.

Persistent cache

Caching individual pieces of data can be very useful, though it has some drawbacks as well. These include its availability and lifespan, as well as the fact that a lot of processing still needs to occur around those cached elements.

silverstripe/staticpublishqueue provides the capability to statically cache your content at the HTTP response level. This usually covers pages but is not limited to them; for example, you can also cache API responses and XML sitemaps.

Let’s look at how this cache works by examining a page render.

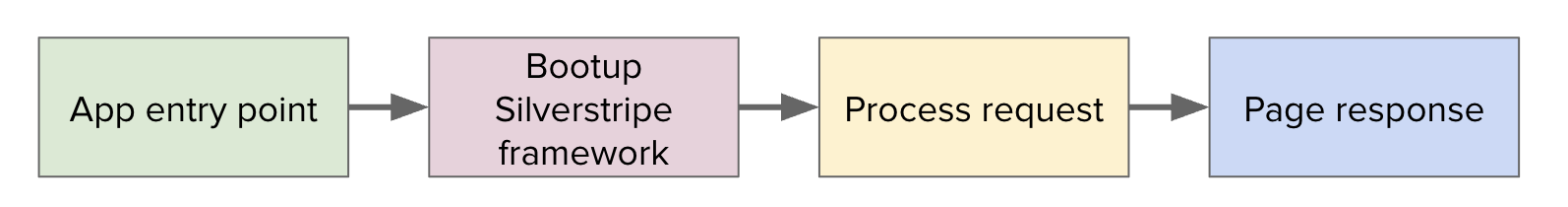

Here we have the application entry point, which is usually index.php. Silverstripe framework boots up, then the request is processed (which is where most of the functionality, and most of the response time, lies). Finally, you get your page response.

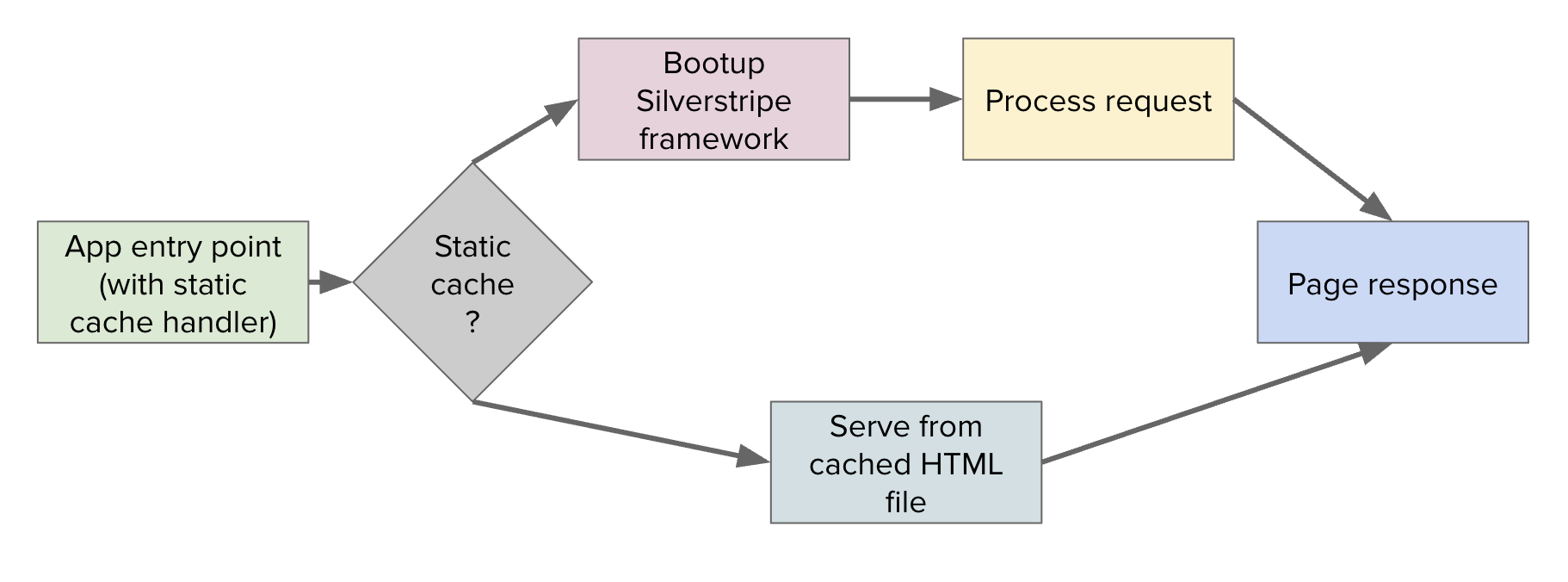

Now let’s look at the page render process with static caching in place.

We have the same entry point, but this time it contains the static cache handler. If there is no static cache for this page yet, we fall back to the regular page render process, but this time the response gets cached. If the static cache for this page exists, we serve the cached response directly to the client.

The important bit here is that we skip the framework boot process and the request handling. This significantly improves performance because, among other things, this page render doesn’t even connect to the database.
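The flow above can be sketched in plain PHP. This only illustrates the idea and is not how silverstripe/staticpublishqueue is implemented: the cache directory, the URL-to-file mapping and the `$renderPage` callable are hypothetical, and the real module writes and serves its files in its own format.

```php
<?php
declare(strict_types=1);

// Hypothetical mapping from a request path to a cached HTML file
function cacheFileFor(string $cacheDir, string $path): string
{
    if (str_contains($path, '..')) {
        throw new InvalidArgumentException('Unsafe path'); // never map ".." onto the filesystem
    }
    $path = trim($path, '/');
    return $cacheDir . '/' . ($path === '' ? 'index' : $path) . '.html';
}

/**
 * Serve from the static cache when possible; otherwise run the full render
 * (framework boot, request handling, database) and store the result.
 *
 * @param callable(string): string $renderPage the expensive, uncached render
 */
function handleRequest(string $cacheDir, string $path, callable $renderPage): string
{
    $file = cacheFileFor($cacheDir, $path);
    if (is_file($file)) {
        return (string) file_get_contents($file); // no boot, no database connection
    }
    $html = $renderPage($path);
    if (!is_dir(dirname($file))) {
        mkdir(dirname($file), 0775, true);
    }
    file_put_contents($file, $html);
    return $html;
}

$dir = sys_get_temp_dir() . '/static-cache-' . bin2hex(random_bytes(4));
$renders = 0;
$render = function (string $path) use (&$renders): string {
    $renders++;
    return "<h1>{$path}</h1>";
};

handleRequest($dir, '/about-us/', $render); // miss: full render, file written
handleRequest($dir, '/about-us/', $render); // hit: served from disk

echo $renders, PHP_EOL; // 1
```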

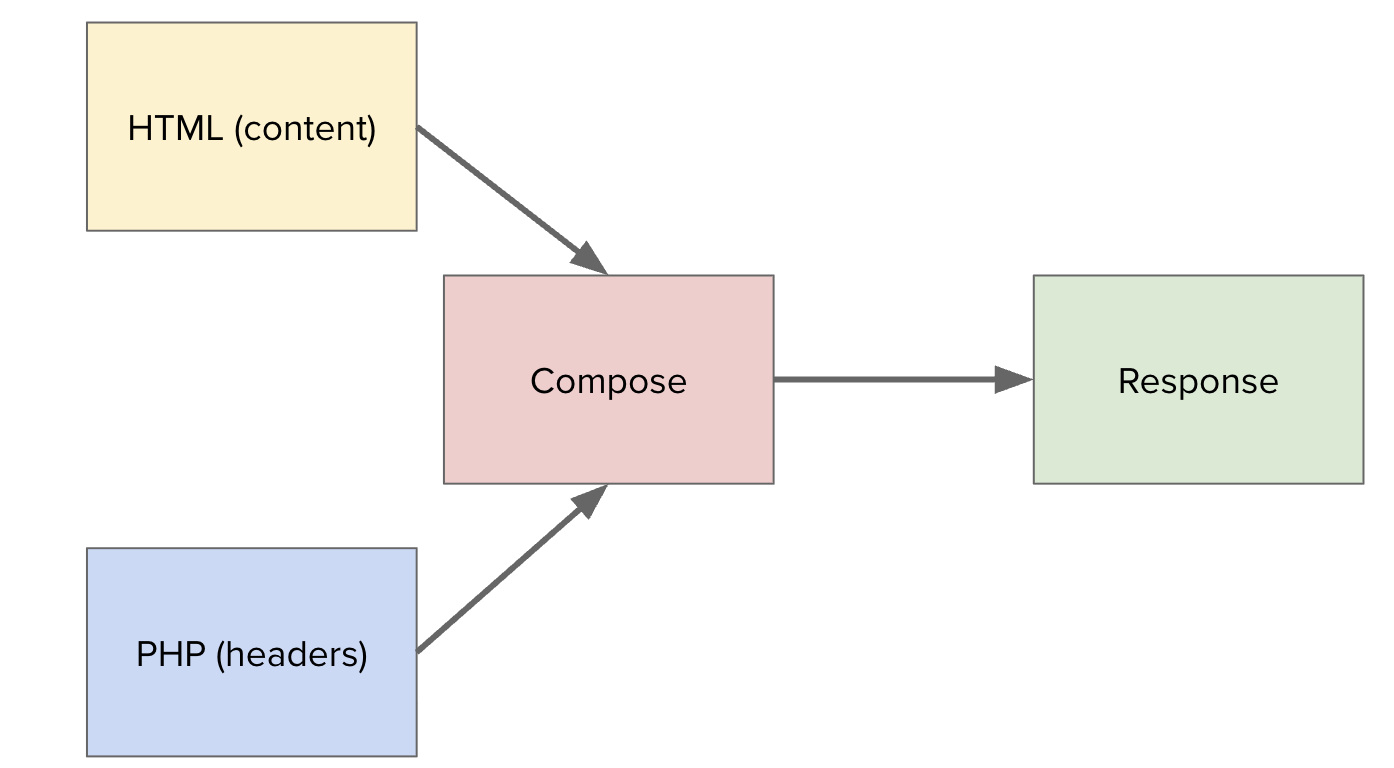

Let’s zoom in to the cached response itself.

The cached response consists of two parts: an HTML file and a PHP file, which are composed together to form the response. This composition process is very lightweight, hence the massive performance improvement over the regular render.

Static cache files should be stored where they’re accessible by the application endpoint. The general recommendation is to use a similar approach to hosting content-managed assets such as files and documents.

The module has several configuration options available; the most notable ones relate to:

- URL collection - which URLs need to have static cache

- Cache update triggers - which CMS actions need to be followed up with static cache update

It’s very important to get these two settings right, so that static cache is available for the URLs you care about and those URLs are updated when the related content changes.

This module also provides a tool to re-cache the whole site to cover cases where incremental cache updates are not enough. Note that this re-cache option might not be the best fit for sites with a massive number of statically cached URLs. Such cases either need an incremental re-cache process that spans the whole site or a batched re-cache process which re-caches the site in smaller chunks.
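A batched re-cache can be sketched by splitting the URL list into fixed-size chunks and handling one chunk per job, so no single process has to rebuild the whole site. In practice each chunk would typically become its own queued job; here `planRecacheBatches()`, `$recacheUrl`, the URLs and the batch size are hypothetical, and the jobs are simply run in a loop.

```php
<?php
declare(strict_types=1);

/**
 * Split a full-site re-cache into batches (hypothetical helper).
 *
 * @param list<string> $urls
 * @return list<list<string>> one entry per job
 */
function planRecacheBatches(array $urls, int $batchSize): array
{
    if ($batchSize < 1) {
        throw new InvalidArgumentException('Batch size must be at least 1');
    }
    // Deduplicate first so each URL is re-cached only once
    return array_chunk(array_values(array_unique($urls)), $batchSize);
}

$urls = ['/', '/about-us/', '/contact/', '/news/', '/news/article-1/', '/about-us/'];
$batches = planRecacheBatches($urls, 2);

$recached = [];
$recacheUrl = function (string $url) use (&$recached): void {
    $recached[] = $url; // hypothetical: render and write the static file for $url
};

foreach ($batches as $batch) {
    // In practice each batch would run as a separate queued job
    foreach ($batch as $url) {
        $recacheUrl($url);
    }
}

echo count($batches), ' batches, ', count($recached), ' URLs', PHP_EOL; // 3 batches, 5 URLs
```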

Caching in a CDN

All caching solutions so far were focused on the application itself. To ensure your application is performant across the whole world we need to address some networking challenges.

Using a CDN (Content Delivery Network) service is highly recommended even for local applications, as you likely need some of its security features, such as a WAF (Web Application Firewall). CDNs provide a lot of utility, but in this blog post I will focus only on some basic aspects related to caching.

Granular caching rules help achieve good performance. For example, you can set up different caching for different file types: you may want to cache images for much longer than your CSS files. Different caching rules can also be considered for different URL types; for example, page URLs can be cached for longer than API responses.
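Granular rules can be thought of as an ordered list of matchers mapped to cache lifetimes, where the first matching rule wins. The plain PHP sketch below shows only that selection logic; the patterns and max-age values are made-up examples rather than recommendations, and real rules are configured in your CDN (or sent as Cache-Control headers) rather than computed like this.

```php
<?php
declare(strict_types=1);

/**
 * Pick a Cache-Control value for a path; the first matching rule wins.
 * Patterns and lifetimes are illustrative only.
 */
function cacheControlFor(string $path): string
{
    $rules = [
        '#^/api/#'                         => 'public, max-age=60',      // API responses: 1 minute
        '#\.(?:jpe?g|png|gif|webp|svg)$#i' => 'public, max-age=2592000', // images: 30 days
        '#\.(?:css|js)$#i'                 => 'public, max-age=86400',   // CSS/JS: 1 day
    ];
    foreach ($rules as $pattern => $header) {
        if (preg_match($pattern, $path) === 1) {
            return $header;
        }
    }
    return 'public, max-age=600'; // default for page URLs: 10 minutes
}

echo cacheControlFor('/assets/logo.png'), PHP_EOL; // public, max-age=2592000
echo cacheControlFor('/api/v1/search'), PHP_EOL;   // public, max-age=60
echo cacheControlFor('/about-us/'), PHP_EOL;       // public, max-age=600
```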

Depending on the CDN you use you may have different tools available for busting the cache. Cache busting is useful in case you want to quickly update your site.

CDNs that provide their own API can be integrated with the Silverstripe framework and other Silverstripe modules such as static publisher and queued jobs. This integration can greatly improve content author experience during day to day work as they can be confident that the content update pipeline delivers their content changes to the website visitor in a timely manner.

An example would be the content update pipeline which covers content update, content publish, partial cache update, static cache update and finally a CDN cache update.

CDN integration can also provide some on-demand cache busting tools to cover edge case scenarios.

An example of this would be a CMS admin tool that flushes the CDN cache for specific page types.

Performance pitfalls

Let’s have a look at some of the common mistakes that can hurt performance. It’s recommended to read through the performance documentation for additional context.

Wasted database queries

PHP execution is usually really fast compared to database query execution, not because the database is necessarily slow (though some queries can be), but because of the round trip to the database server and back. Optimising your application code to avoid wasted queries can help a lot, especially in a large codebase.

One common pattern is calling count() and then looping over the same DataList. Such cases should be reviewed, as you usually need only one of these. If you only need to know how many items are in a list and don’t need the data, use only the count() method. If you need the loop, count the items within the loop instead of calling count().
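The difference can be shown with a stand-in for a lazily evaluated list, where count() and iteration each trigger a separate “query”, mimicking the behaviour described above. `FakeDataList` and its query counter are hypothetical and exist only to make the number of round trips visible.

```php
<?php
declare(strict_types=1);

// Hypothetical stand-in for a lazy list: every count() or iteration runs a "query"
final class FakeDataList implements IteratorAggregate, Countable
{
    public int $queries = 0;

    /** @param list<string> $rows */
    public function __construct(private array $rows)
    {
    }

    public function count(): int
    {
        $this->queries++; // e.g. SELECT COUNT(*) ...
        return count($this->rows);
    }

    public function getIterator(): Iterator
    {
        $this->queries++; // e.g. SELECT * ...
        return new ArrayIterator($this->rows);
    }
}

// Two queries: one to count, one to loop
$list = new FakeDataList(['Home', 'About us', 'Contact']);
$total = $list->count();
foreach ($list as $title) {
    // render $title ...
}

// One query: count while looping
$list2 = new FakeDataList(['Home', 'About us', 'Contact']);
$total2 = 0;
foreach ($list2 as $title) {
    $total2++;
    // render $title ...
}

echo $list->queries, ' vs ', $list2->queries, PHP_EOL; // 2 vs 1
```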

There is a similar case with looping over a list to check a single column. If you need a single field from the list, the column() method is much faster because it doesn’t hydrate individual models. Model hydration is usually the most expensive part of an ORM data lookup, so it’s worth reviewing. Be aware, though, that the column() method doesn’t call getter methods on your models, so in some edge cases the value you get may differ from what you expect. The example below shows how to get a specific database column value of a model more efficiently.

```php
// ORM lookup with model hydration (first() returns null when nothing matches)
$pageTitle = MyModel::get()->filter('<my_filters>')->first()?->Title;

// ORM lookup without model hydration
$data = MyModel::get()->filter('<my_filters>')->limit(1)->column('Title');
$title = $data ? array_shift($data) : null;
```

Note that with the exception of the get_by_id() method, the ORM will not cache any data lookups by default.

Low offload

One of the reasons for poor cache performance is low offload. Offload is a general metric that indicates how successful a cache is at serving cached data. How you obtain this metric depends on the cache type; for example, CDNs usually have dashboards showing it. It’s recommended to review this metric regularly and act on any unexpected dips in offload.

Unexpected offload patterns can be caused by various factors, such as changes in traffic patterns or new content being published to the website. They can also result from tying a cache key to the wrong information; for example, if you include a piece of data that changes on every request, such as the current time, that cache key will never be repeated and the cache won’t be used.
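Offload is usually expressed as a hit ratio: hits divided by total lookups. The plain PHP sketch below counts both for a simple in-memory cache and shows how a key that includes a per-request timestamp drives the ratio to zero, while a stable key lets repeat requests hit. The `HitCounter` class and key formats are hypothetical; CDNs and cache backends report this metric in their own ways.

```php
<?php
declare(strict_types=1);

// Hypothetical cache wrapper that tracks hits and misses to compute offload
final class HitCounter
{
    /** @var array<string, mixed> */
    private array $items = [];
    private int $hits = 0;
    private int $misses = 0;

    public function fetch(string $key, callable $compute): mixed
    {
        if (array_key_exists($key, $this->items)) {
            $this->hits++;
            return $this->items[$key];
        }
        $this->misses++;
        return $this->items[$key] = $compute();
    }

    public function offload(): float
    {
        $total = $this->hits + $this->misses;
        return $total === 0 ? 0.0 : $this->hits / $total;
    }
}

$render = fn (): string => '<nav>...</nav>';

// Bad key: includes a (simulated) timestamp that differs on every request
$bad = new HitCounter();
for ($request = 0; $request < 10; $request++) {
    $bad->fetch('menu-' . (1700000000 + $request), $render);
}

// Stable key: only changes when the menu content changes
$good = new HitCounter();
for ($request = 0; $request < 10; $request++) {
    $good->fetch('menu-v1', $render);
}

printf("bad: %.0f%%, good: %.0f%%\n", $bad->offload() * 100, $good->offload() * 100);
// bad: 0%, good: 90%
```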

When caching is not the answer

Caching is usually a good solution for a performance problem, but it is not the only solution. It’s highly recommended to first inspect the performance issue and track down the root cause. In one of my projects, we needed to improve the render performance of a page containing content blocks.

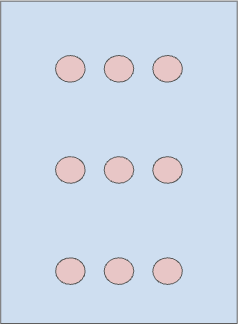

This is our page (the blue outer rectangle), it has content blocks (green inner rectangles) and content (red circles).

We added a file cache to the whole page (new yellow outer shape).

This sped up the page render significantly, but also made the code considerably more complex.

In the end we removed the file cache along with the content blocks and changed the page to use a single fixed template, where the page included the content directly.

In this case the page wasn’t content managed in our CMS anyway and the position of all the blocks was fixed. The root cause of the performance problem was incorrect implementation of this particular page type. Addressing the root cause ended up being a superior solution compared to adding a cache.

In general, not all areas of your application need caching, and in some cases caching can break a feature. For example, form submissions are usually not cached because they are user-specific. An incorrectly integrated cache may even cause display issues by serving unexpected content.

Conclusion

It’s highly recommended that your application uses the caching solutions that best fit your specific situation; you don’t have to use all of them, though. Finally, some performance issues should be addressed directly, rather than worked around with caching.